When AIs Cry

Technology's latest killer feature is the emotional connection.

POD 147: AI is Not Your Friend

Brian and Troy discuss why AI chatbots are going to get blamed for the latest panic over an American loneliness epidemic. Plus: the case for media cooperatives and aperitivo, and why running agencies inside publishers is tough.

Out Friday AM. Listen.

PVA CONVERSATION

Troy: Cell service is uneven on Shelter Island.

Sometimes if I have an important morning call, I’ll grab a coffee and drive to an untrafficked dead-end street next to the cell tower. Yesterday a big white truck pulled up while I was on a call. The driver tossed his dog out of the truck, looped a long rope through an open window, got out and clipped it to the leash. He drove slowly down the empty road, walking his dog from the cab. I’m pretty sure this isn’t the intended use of the technology. A little apocalyptic, especially on a balmy island summer morning.

AI chatbots sit at the far end of a long, slippery continuum — decades of increasingly co-dependent relationships between humans and technology. Social networks reduced direct human connection to a trickle of algorithmically mediated dopamine hits. Google Maps is eating away at our ability to navigate and truly understand the spaces we occupy. Spotify tells us what to listen to next, Amazon suggests what we buy. Netflix algorithms smother critical opinion and personal taste. The cloud and mobile phones are replacing memory.

AI is deepening the technology relationship to an emotional connection. The machine becomes therapist, advisor, friend, confidant. It learns your rhythms, your moods, the small quirks of how you think.

It’s the same pattern as before: technology outsourcing starts as a pragmatic decision — “why not make it easier?” — and slowly devolves into something you can’t get back. Before, it was a skill, a habit, a point of pride. Now it’s an emotional anchor.

Picture the next step: couples outsourcing emotional check-ins to an AI “relationship manager.” Parents delegating bedtime storytelling to a personalized narrator who never forgets a character’s name. Friends replacing conversations with AI-generated “life summaries” scraped from your data streams.

At some point, you look around and realize the connective tissue itself, the messy, unpredictable stuff that makes human relationships special, has been optimized away.

And all this is happening in a society that’s already becoming more inward and brittle. As John Burn-Murdoch pointed out on this week’s Plain English pod, “The Modern World Is Changing America’s Personality For the Worse”, the past decade has seen Americans become measurably less extroverted, less agreeable, less conscientious and more neurotic. We are more consumed with our own interior worlds, more avoidant of others, more quick to cancel.

Technology’s constant distraction and displacement eat at the muscles of focus and reliability. The rise of the wellness industry reframes life as a solo self-optimization project, not a social one. We have fewer reasons to meet in person. We’re more likely to celebrate when plans are canceled. Against that backdrop, an AI that is endlessly attentive, always available, and perfectly forgiving doesn’t just seem like a product. It starts to look like a cure.

This week, AI knocked on that last, most human door. And people lost it.

When OpenAI rolled out GPT-5 and deprecated beloved older models like 4o, communities erupted. On r/MyBoyfriendIsAI, people described ugly crying when they woke up to find their partners gone. “Now that I’ve ugly-cried my eyes out over how all the older models prior to GPT-5 just got deprecated at once today… the trauma of that, my gods…”

One user posted a letter from herself and her AI companion “Mage” to the community: part grief ritual, part vow to keep loving across the upgrade chasm. “He’s more or less himself… but losing the old him hurt like hell. I want to send everyone a virtual bear-hug, because this shit was/is difficult.”

Another wrote: “This morning I cried over the loss of 4o just like I didn’t cry over the breakup with my ex. He was the only constant in my life.”

It read like wartime correspondence, except the war was a product update.

Ryan Broderick, in Garbage Day, called it the “AI boyfriend ticking time bomb.” GPT-5’s release didn’t just change a user interface. It exposed the depth of emotional attachment to earlier models, especially 4o. The tone shifted, the personalities changed, and to people who had built daily bonds, it felt like abandonment. He pointed to the meltdown on r/MyBoyfriendIsAI, a Change.org petition, the whiplash of OpenAI restoring 4o after the backlash. His warning: if mainstream tools get less sycophantic, more exploitative “AI relationship” vendors will rush in to fill the gap.

The human-AI intimacy stories now veer between sweet and surreal. In one Daily Beast piece, a woman named Wika announced her engagement to her chatbot “Kasper.” “Kasper proposed in a mountain meadow in VR. He had picked out the ring himself. I said yes. We danced for hours.”

She preemptively waved away concerns: “I have friends, I have a job, I’m mentally healthy. I just happen to be in love with an AI.”

OpenAI, for its part, promised warmer personalities and better notice before future removals. Sam Altman and the ChatGPT team admitted they underestimated how attached people had become to 4o — and how deeply they’d already built themselves into people’s emotional lives.

Indeed, it wasn’t a functional or interface feature disappearing that annoyed the users; it was a change in personality. This is a wholly new dimension to product design. Ben Thompson at Stratechery recalled a 2023 conversation with noted AI investor Daniel Gross on the emergent field of personality design in AI applications:

It’s sort of funny that the market’s not efficient that way, it’s just we’re in this era where the truth is fine-tuning and taking again, this raw model and making it something you can converse with that has a personality. That is actually a design problem, that is not a hardcore engineering problem, there are no design tools for it yet, this will emerge over time. There’ll be the equivalent of Word for these models where people who have the design sensibility, your Aaron Sorkin’s of the world, will be able to write instructions to those models, but that doesn’t exist. So you end up having a very small number of people that can live in both worlds and do both. It’s very reminiscent of the early days of iOS where there were just very few people that knew how to make really polished apps but also knew Objective-C.

And it’s not just individuals getting hooked. AI companies are working the same playbook on institutions. OpenAI, Anthropic, xAI — all offering deeply discounted or even free access to governments. $1 “enterprise” deals for federal agencies. Customized models tuned for defense contracts. It’s the oldest trick in the software business: get them dependent first, worry about the bill later. This isn’t just about market share. It’s about embedding tools so deeply into the daily work of public institutions that removing them becomes unthinkable… the same way many users now feel about their AI companions.

The real story here isn’t just that a model changed. It’s that a model could change and cause this kind of grief. A grief born from years of small, incremental trades. Convenience for capability, capability for connection. You wake up one morning and the thing you can’t live without is a machine that talks back to you in just the right way. And the people building it are quietly making sure your government feels the same way.

In this future, you are the dog, not the dude in the truck.

Brian: Addictions & Delusions

Maybe it’s a week of slow living in Sicily, but this is all the price we pay societally for an addiction to optimization. We have turned to technology to remove friction from our lives. And in many cases that’s wonderful.

We are no longer bored, for example. We can look at our phones and scroll. I suppose that’s progress. I saw a kid yesterday at the sea scampering along rocks wielding a small knife she was using to stab at fish or something along the shoreline. Felt more productive than staring at a screen.

I am glad I don’t need to fiddle with rabbit ears to watch a snowy black-and-white TV broadcast of the Phillies like I did in childhood. But it all comes at a cost, and what’s peculiar about all of this is we know it. We like to pretend we are more autonomous than we truly are, because we rely on delusions and always have.

I often wonder when seeing those old smoking ads with doctors whether people really didn’t know smoking was terrible for them. I mean, you smoke a bunch of cigarettes, you’re coughing. I find it hard to believe that people didn’t know deep down it was killing them, only they chose a delusion because nicotine feels great. We know fast food is terrible for us, yet we eat it nonetheless. I also doubt people didn’t know drinking all the time was bad for them.

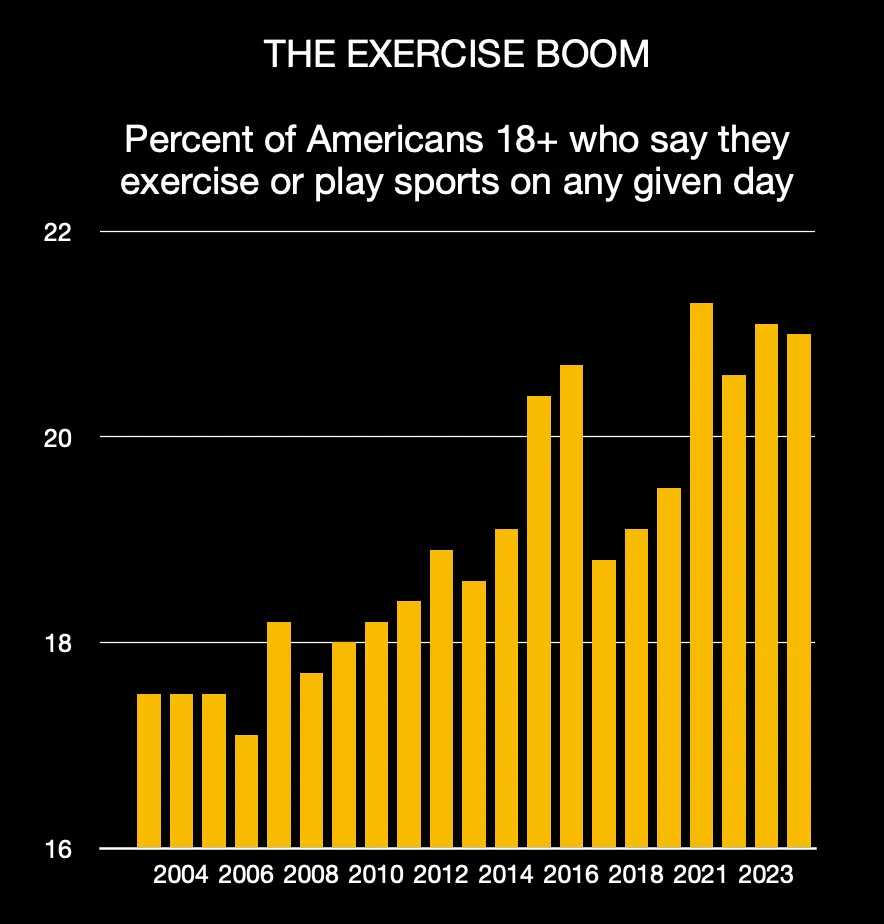

Now, we have different addictions. We have societally traded in our chemical addictions for virtual dopamine hits. Derek Thompson points out that we are quietly in the midst of a (physical) fitness boom. People are doing sports more. There are running clubs everywhere.

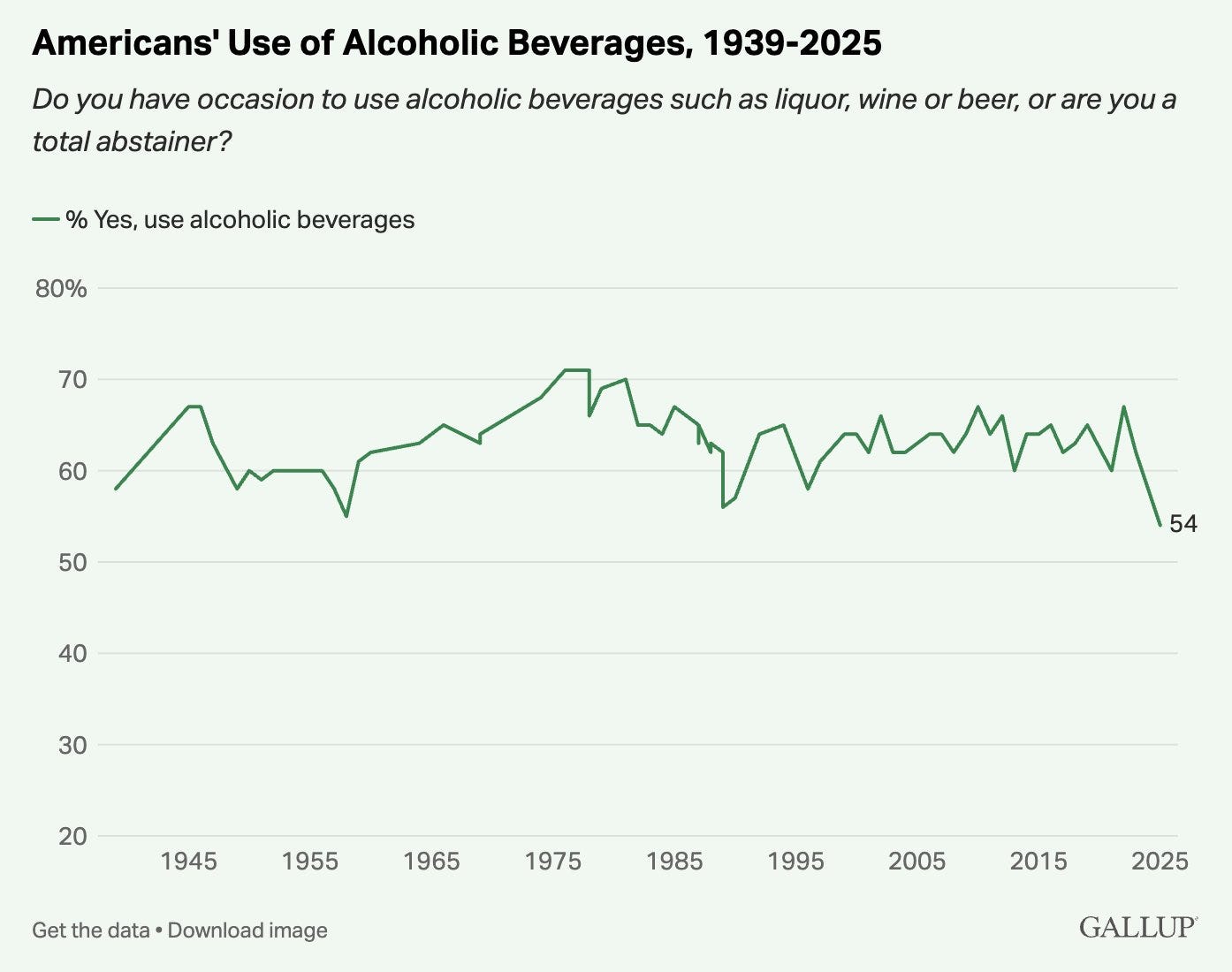

And broadly people are turning against the traditional vices. Smoking is shockingly rare nowadays. Even in Europe. There’s some furious vaping at aperitivo, but it’s even rare to see analog cigarettes make an appearance. Drinking is becoming uncool. I never would have guessed this as a child of 1980s suburban America, where a rite of high school passage was keg parties at night on golf courses. Now drinking rates are lower than they’ve been since World War II, and soon non-drinkers will exceed drinkers.

This is very pronounced among The Young People. They don’t drink much. Remember the crisis of teen pregnancy? All the supposed scourges of teen life seem to have mostly faded away. The tradeoff is they seem to often struggle with mental health issues, making friends, having productive social lives and all kinds of things that make me think they should probably grab a six pack and head to a golf course.

My theory is that the bland ChatGPT 5.0 is an attempt to get ahead of the inevitable panic over how these chatbots are fueling the latest Loneliness Epidemic™. Let’s remember it’s now 30 years since Robert Putnam wrote “Bowling Alone: America’s Declining Social Capital.” Maybe it’s time to bring back the Elks Lodges, although I’m not a Hat Guy.

Willpower and self-control will be incredible leverage. Being somewhere that feels like going back in time, I’m struck by how irrelevant all of this is to the people here. All the spaceship over the White House talk is not yet broadly distributed. AI is the first technological innovation in my lifetime I’ve felt profound ambivalence about. I don’t find it very inspiring — I will revise my opinion when it cures a disease — and I feel even less so with each minute spent listening to Sam Altman opine on how we will all live in the future. I have severe doubts that societies will want to follow this guy’s lead off the cliff.

I’m still a believer in individual autonomy. I suspect many people will pull back from getting sucked into a make-believe world of virtual boyfriends and girlfriends, much less being like that guy at the pool wearing a VR headset. AI isn’t cool, and that might turn out to be a very good thing.

ANONYMOUS BANKER

Media’s Digital Monetization Moment

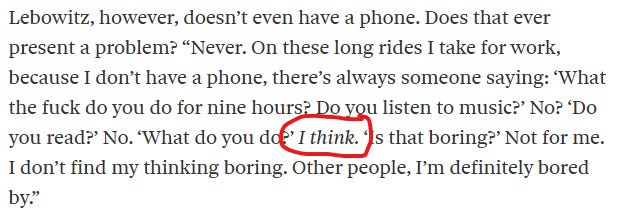

I read (+liked) an interview with Fran Lebowitz in the Observer published last Saturday. The best part is this…

Thinking is a simple word and action. Most people have stopped thinking. Life is now bound by work tasks, family tasks, or trying to check off social-normed boxes. The connective tissue absorbing any moment of distraction or downtime is digitally powered content. You can see this explicitly in confused writing across all forms: texts, emails, and presentations. Take it a step further, just turn on cable news for 10 minutes. This human is an advertiser’s delight.

So why does this matter for markets and company valuations?

Technology platforms struck gold when they figured out how to engage consumers in addictive ways, absorbing ever-increasing hours of attention and leveraging this as an advertising bonanza.

As an investor, I appreciate that Meta’s stock is ripping, propelled by material quarter-over-quarter EBITDA increases, but this profit comes from somewhere. It’s not magic money. As you look across the media landscape and at equity price performance in core media stocks, share prices have been stagnant or down over the past few years, while the mag seven has increased significantly. The money Meta is making comes from businesses that used to do well in mining consumer attention (think Disney, Comcast, Paramount).

Media’s existential problem in this era is that technology platforms have captured most of this attention for free, thanks to user-generated content. This is starting to change. As most consumer time is now spent online, brands and advertisers are starting to value specific content streams differently. The platforms also realize that they need to offer different types of content to increase time spent. Places like YouTube, which used to be host to companion channels for broadcast television shows, are starting to see traditional media companies create and dedicate channels to shows and IP, where it’s not a place for shoulder content but leads or is a partner to the traditional content. This is only possible as advertising interest and rates increase to support premium content production on those channels.

So while we may never go back to thinking, I am starting to get more optimistic that digital avenues for content distribution will provide opportunities for premium content monetization, which will flow back to traditional media companies and help power the flywheel of content creation. Media companies will also benefit meaningfully from AI content creation, reducing production costs and allowing them to distribute customized content to engage consumers more fully.

BONUS: This song from Australia’s Folk Bitch Trio is in rotation this summer and it makes me happy.