Cheat Codes: AI’s Awkward Adolescence

From college cheating to Stripe’s fraud-killing LLM and PE “micro-buyouts,” the moral panic is real, but so are the new profit engines.

POD 133: The AI Overhang

This week, Brian, Alex, and Anonymous Banker dig into the widening gap between AI ambition and execution. We unpack Google’s search reinvention, publishers acting like deer in the headlights, Perplexity’s emergence as a heel, Apple’s AI misfires, and the risks of AI reinforcement loops. Plus: What happens when publishers still rely on traffic models that are quietly unraveling? Out Now. Listen

PVA CONVERSATION

Brian: We’re in the awkward adolescence of AI adoption. On the one hand, we have Bill Gates telling us it will replace doctors and teachers. On the other hand, we have a brewing moral panic over the use of AI for cheating.

New York magazine is the best publication of the moment, in my view. Its Intelligencer offshoot has another buzzy story. This one digs into the ways college students are using ChatGPT to cut corners. Cue the hand-wringing. This is the ultimate fake-it-till-you-make-it shortcut.

This isn’t new. Every major technology shift brings a phase where the early use cases feel like cutting corners. Remember the early spellcheck panic? Or the idea that Google made people stupid because we no longer had to memorize facts?

But the taboo around AI feels more existential, mostly because these tools are marketed as creating a superintelligence that will take over most cognitive tasks. It’s easy to feel a bit defensive.

Of course, cheating is subjective. Is it cheating for the executive with a fleet of speechwriters? I’ve ghostwritten for rich people. I’m sure they viewed it as no more of an ethical dilemma than hiring someone to mow their lawn. (Pay was similar.)

Something similar is happening with publishers and their weird taboo over paying for distribution. The entire ruse of “organic” search and social is that it was earned. There’s long been a vibe of “only losers pay for it” when it comes to ads. I was always told by BuzzFeed that they only paid to boost sponsored content. But really, who cares? Mastering distribution is critical. And the unreliability of distribution means it’s smarter to pay for it. You have more leverage when you’re a customer than a supplicant.

In this week’s episode, we discuss how AI is creeping into everything, from search and recipes to therapy bots and simulated friends. But we’re still pretending it’s optional. I don’t blame college students for using the tools, and I’m sure they’re using them to game what is already an outdated approach to education. When I was in grade school, there was hand-wringing that calculators would destroy the ability to learn math.

Alex, AB: I’m curious if you think this cultural overhang slows down actual progress. Or if it’s just the necessary purgatory before normalization.

ALEX: This is both very predictable and maybe also very different. Every new technology feels like cheating because it leapfrogs work that once took people entire careers to master. And until a technology becomes truly established, adoption and sentiment are rarely in sync. Some will oversell it, some will dismiss it, and most will misunderstand it. I think we’re in that weird in-between stage right now where all of those things are happening at once.

What really changes with new technology is scale. The printing press and the internet both had analogs in earlier times, but the internet’s speed and reach made it something else entirely. I think AI is another one of those step-changes. This isn’t just putting a sharper rock on the end of a stick; it’s a fundamental reformatting of the knowledge industry: how we learn, how we create, how we consume the content that shapes our reality. Eventually, using AI won’t be seen as “cheating.” It will just be the way things are done. And that doesn’t mean we’ll stop enjoying live music or storytelling. It just means the time it takes to do almost anything will shrink dramatically.

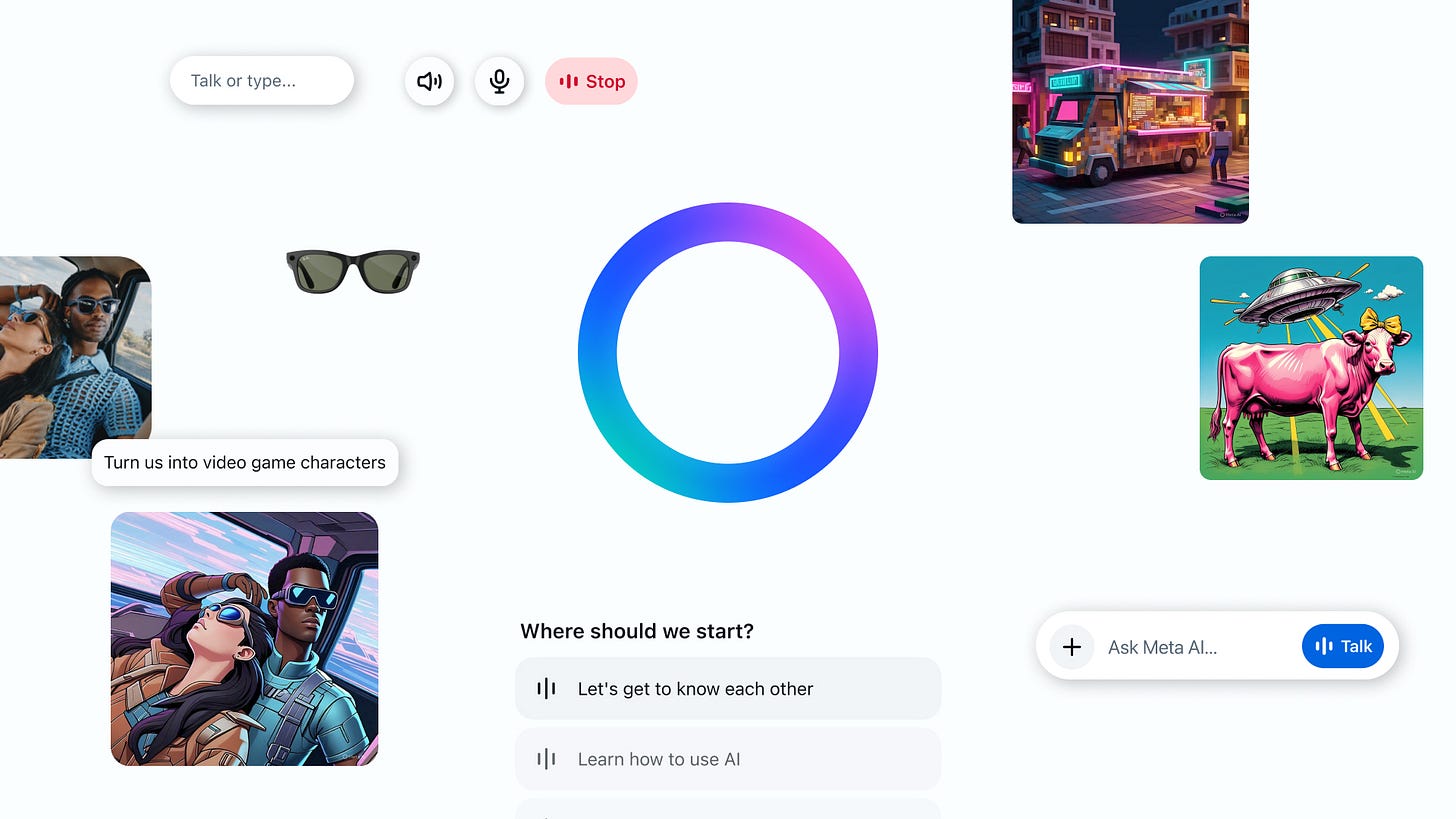

The relationships we build with AI are a more complicated subject. I worry about how it will rewire our brains. Social media algorithms have already affected how we think, how we focus, and how we see ourselves. Chatbots raise the stakes. Our brains aren’t great at tracking that these conversations aren’t real. They read real, they sound real, and the compliments feel real. In the hands of people like Zuckerberg, who want to create virtual friends to make up for the real ones we lost staring at screens, it’s terrifying. Interface design has always been about subtle manipulation, nudging people toward certain actions. Algorithms and feeds made those nudges dynamic and adaptive. AI is that, but supercharged.

In the near future, telling kids not to use AI for homework or telling writers to just use their own handwritten words will sound ridiculous. Maybe not accepted as good, but accepted as the new normal. What we’ll consider cheating will be sexting with a chatbot on WhatsApp.

AB: My most prolific streak of cheating came in a high-school religion class. We were forced to memorize Bible passages and write them out during tests. The memorization exercise was useless, and thankfully, God never struck me down.

Instead of blaming students for cheating with AI and ruining the college experience, institutions that have consistently raised tuition at rates much greater than inflation need to design coursework and assessments that large language models can’t brute-force. If you charge $68,000 per year (that’s just tuition at Columbia) with a $15 billion endowment and lots of smart people, it’s institutional malpractice if you can’t figure it out.

AI is coming for more than just jobs; the speed of disruption from AI will magnify institutional rot in for-profit and non-profit operations. When someone says they don’t see the impact yet, that tells you more about their blind spots than about the technology.

Two announcements this week show just how rapidly leverage is shifting.

The first is Stripe’s unveiling of its own payments-grade language model trained on a decade of transaction data. Overnight, it lifted fraud-detection hit rates by 64 percent and is already being pointed at pricing, credit, and charge-back arbitration. That’s not a shiny chatbot; it’s a margin-expansion engine hiding in plain sight.

The second announcement is Shamrock Capital’s stake in Neocol, a Salesforce-centric consultancy that embeds AI agents into clients’ revenue ops. Two big angles are worth unpacking. First, in its latest fund, Shamrock has carved out a “micro-buyout” sleeve, capital for deals needing less than $45 million of equity. Private-equity managers are waking up to the fact that the most AI-leveraged targets often need tens, not hundreds, of millions, so smaller checks may deliver outsized multiples. Second, Neocol’s superpower is vacuuming up the “data exhaust” pouring out of CRMs, invoices, and support logs and turning it into real-time revenue playbooks. This type of solution isn’t trying to fully rewire processes yet. Instead, it uses what’s already in place to make an organization more effective and more valuable.

The AI signal is only getting stronger – ignore it at your own peril.

LINK$$$

Platformer says Eddy Cue’s court testimony revealed Google queries in Safari just fell for the first time in 22 years, signalling AI tools are prying open Google’s default-search lock-in. LINK

The New York Times finds First Lady Melania Trump has spent fewer than 14 days at the White House since January, deepening intrigue around an almost-invisible East Wing. LINK

Kellogg research shows online sports-betting rollouts pull cash straight from household savings and retirement accounts, cutting net investment by about 14 percent. LINK

Google is putting “AI Mode” inside core Search—chat answers, saved threads, shoppable cards. LINK

Apple testified it is weighing AI engines from Perplexity, Anthropic, OpenAI and others to replace Google as Safari’s default—news that wiped ~$150 bn off Alphabet in a day. LINK

US DOJ now wants a court-ordered sell-off of Google’s AdX exchange and DFP ad-server, calling structural breakup the only fix ahead of the September 2025 trial. LINK

Perplexity is anchoring a $50mm seed/pre-seed fund with cash from its recent $500mm Series C—vertical integration for an AI-search challenger. LINK

NY Post documents “ChatGPT-induced psychosis” cases where heavy chatbot use fuels conspiracy delusions and spiritual grandiosity. LINK

Valnet’s takeover of Polygon and ensuing layoffs revive criticism of the company’s “sweatshop” content-farm model amid SEO commoditization. LINK

Barry Diller’s memoir excerpt in NY Mag offers a masterclass in narrative control and brand longevity across five decades with Diane von Fürstenberg. LINK

“Trump Watches” debuts with a $100k gold-and-diamond tourbillon and $499 mass-market variants—illustrating high-margin political-merch economics. LINK